How do I prevent specific pages from getting indexed by search engines?

To prevent specific pages from being indexed by search engines, follow these steps:

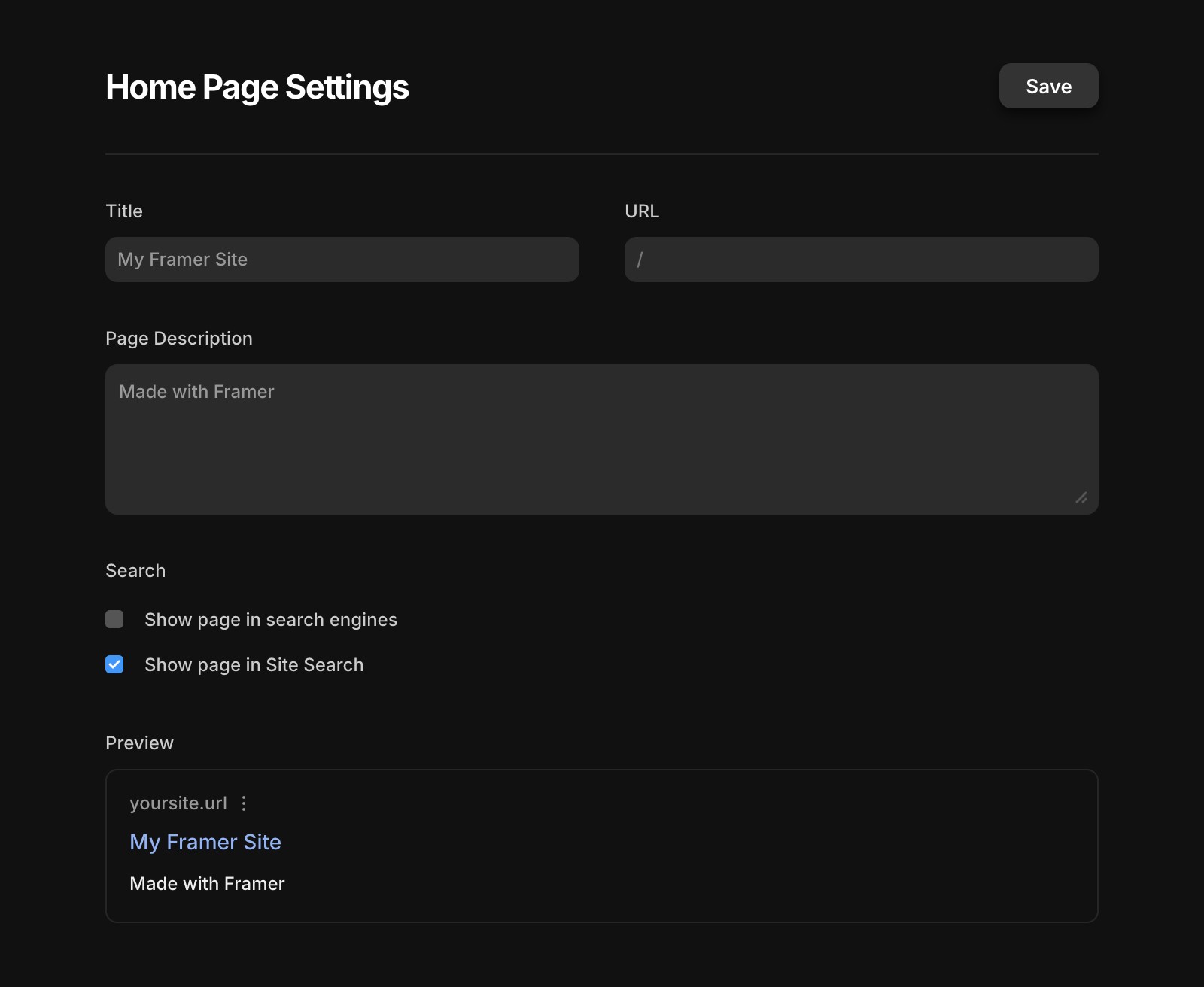

Click the gear icon in the top menu to open Page Settings.

In Page Settings, find the “Show page in search engines” option and disable it to prevent the page from being indexed.

Save your changes and republish the site. Republishing adds a “noindex” robots meta tag to the page's HTML.
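After republishing, you can confirm that the directive is present by viewing the page source. Below is a minimal Python sketch of that check, using only the standard library's `html.parser`. It assumes you have already downloaded the page's HTML as a string. The class and function names (`RobotsMetaFinder`, `has_noindex`) are hypothetical. The exact tag your site emits may differ in attribute order or may include extra directives, so the sketch accepts any `noindex` (or `none`) value inside a `robots` meta tag.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> tags (hypothetical helper)."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; values may be None.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        # Only the generic "robots" name is checked here; crawler-specific
        # names such as "googlebot" are ignored in this sketch.
        if attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            self.directives.extend(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str) -> bool:
    """Return True if a robots meta tag in the HTML contains noindex (or none)."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in finder.directives or "none" in finder.directives


page = '<html><head><meta name="robots" content="noindex"></head><body></body></html>'
print(has_noindex(page))  # True
```

Self-closing tags such as `<meta ... />` are handled too, because `HTMLParser` routes them through `handle_starttag` by default.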

Why doesn't my robots.txt file get a “Disallow” rule when I disable indexing for a page?

Disabling indexing for a page adds a “noindex” robots meta tag to that page instead of a “Disallow” rule to robots.txt, as recommended by Google. You can read more about this in Google’s documentation: Introduction to Robots.txt.

Google does not recommend using a robots.txt file to keep pages out of Google Search results. If other pages link to the blocked page with descriptive anchor text, Google may still index the URL without crawling it. To reliably prevent a page from appearing in search results, use a “noindex” meta tag or another method such as password protection. For more details, refer to Google’s guide: Block Search Indexing.

It’s important to note that for the “noindex” directive to work, the page must be accessible to crawlers. If the page is blocked by robots.txt or otherwise inaccessible, search engines won’t see the “noindex” directive. In this case, the page could still appear in search results if other pages link to it.
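Whether a crawler can reach a page, and therefore read its “noindex” tag, can be approximated with Python's standard `urllib.robotparser`. This sketch assumes you pass in the text of your site's robots.txt and the page URL. The function name `crawler_can_see_noindex` and the example URLs are hypothetical. Python's parser does plain prefix matching and does not implement Google's `*` and `$` wildcard extensions, so treat the result as an approximation of how Googlebot reads the file.

```python
from urllib.robotparser import RobotFileParser


def crawler_can_see_noindex(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if a crawler obeying robots_txt may fetch url.

    If this returns False, a compliant crawler never requests the page,
    so any noindex meta tag on it goes unread (hypothetical helper).
    """
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


robots = "User-agent: *\nDisallow: /private/\n"
print(crawler_can_see_noindex(robots, "https://example.com/private/page"))  # False
print(crawler_can_see_noindex(robots, "https://example.com/blog/post"))     # True
```

In this example, a “noindex” tag on `/private/page` would have no effect, because the `Disallow` rule stops the crawler before it reads the HTML.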

By following these guidelines, you can effectively manage which pages are indexed by search engines and control your site’s visibility.

If you're still experiencing issues, please reach out to us through our contact page for further help.